Policy Gradient Methods

Policy Gradient Methods

In summary, I guess because 1. policy (probability of action) has the style:, 2. obtain (or let's say 'math trick')

in the objective function (

i.e., value function

)'s gradient equation to get an 'Expectation' form for

:

, assign 'ln' to policy before gradient for analysis convenience.

Notation

J(θ): any policy objective function of θ (vector).

: step-size parameter.

: policy gradient.

: ascending the gradient of the policy.

: action policy.

Usually, probability of action looks like:

(a)

Soft-max policy, weight actions use linear combination of features x

or

(b)

continuous action, Gaussian distribution policy

so

so

We get score function .

For (a)

For (b)

The results are the same as the equations at the page 336 from Chapter 13. Policy Gradient Methods. Reinforcement Learning: an introduction, 2nd, Richard S. Sutton and Andrew G. Barto.

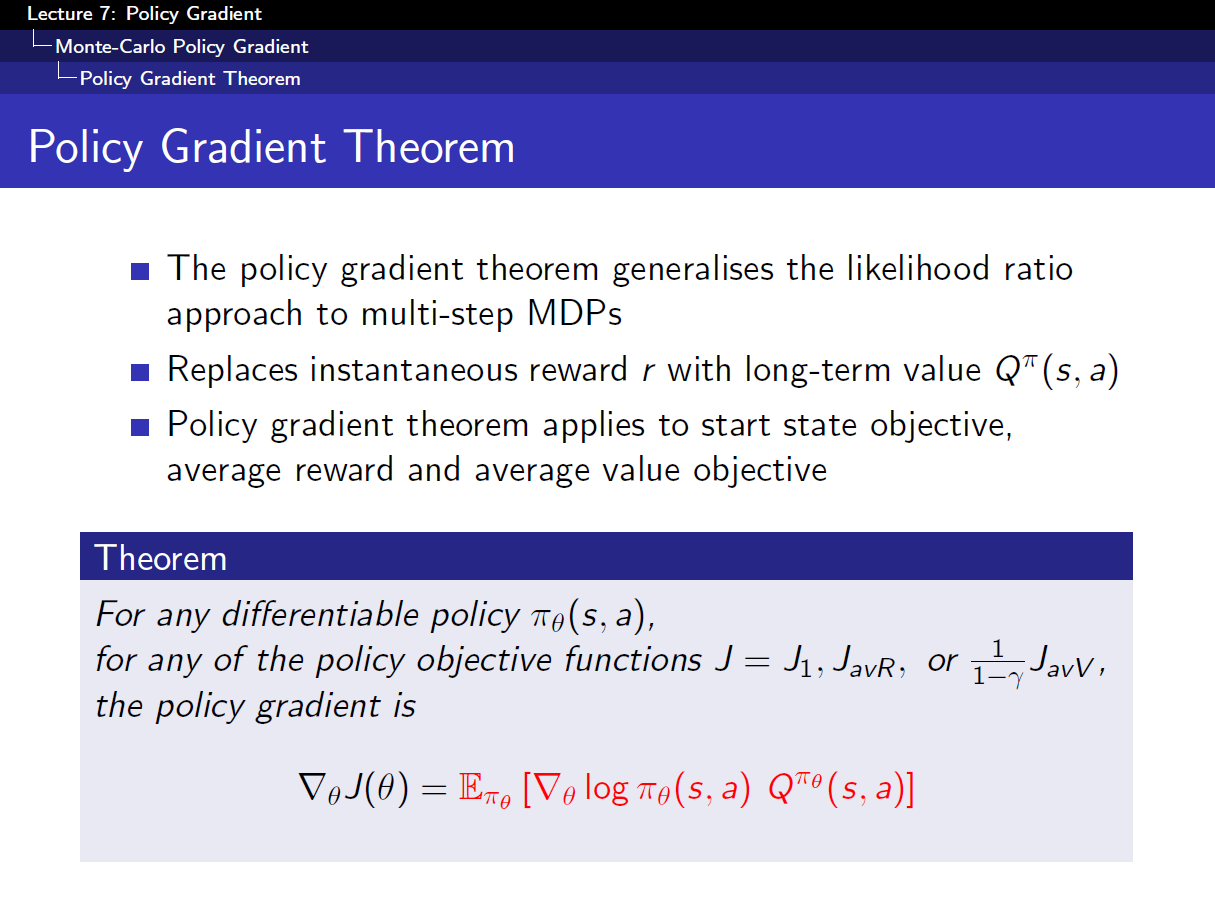

For one-step MDPs:

For any of the policy objective functions:

so

so, we get algorithm for updating θ:

In summary, I guess because 1. policy (probability of action) has the style:, 2. obtain (or let's say 'math trick')

in the objective function (

i.e., value function

)'s gradient equation to get an 'Expectation' form for

:

, assign 'ln' to policy before gradient for analysis convenience.