KL Divergence

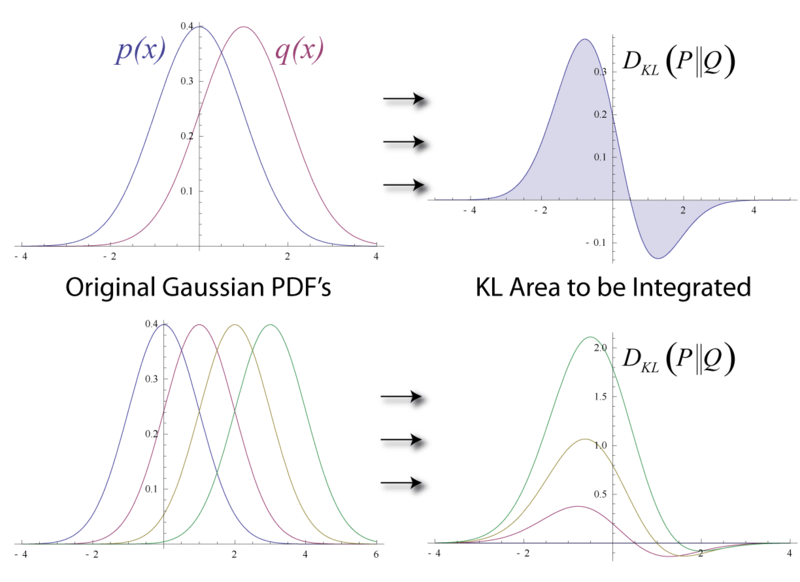

In mathematical statistics, the Kullback–Leibler divergence (also called relative entropy) measures how one probability distribution differs from a second, reference probability distribution.

https://en.wikipedia.org/wiki/Kullback%E2%80%93Leibler_divergence
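
For discrete distributions P and Q over the same outcomes, the standard definition is D_KL(P || Q) = sum over x of P(x) * log(P(x) / Q(x)). The following is a minimal sketch of that sum using only the Python standard library. The function name `kl_divergence` and the example distributions `p` and `q` are illustrative assumptions, not taken from the source; the natural logarithm is used, so the result is in nats.

```python
import math


def kl_divergence(p, q):
    """Return D_KL(P || Q) in nats for two discrete distributions.

    p and q are sequences of probabilities over the same outcomes,
    each assumed to sum to 1 (this sketch does not check that).
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0.0:
            continue  # convention: a term with P(x) = 0 contributes 0
        if qi == 0.0:
            return math.inf  # P puts mass where the reference Q has none
        total += pi * math.log(pi / qi)
    return total


# Hypothetical example distributions over two outcomes.
p = [0.5, 0.5]
q = [0.9, 0.1]

print(kl_divergence(p, q))  # divergence of P from reference Q
print(kl_divergence(q, p))  # a different value: the measure is not symmetric
print(kl_divergence(p, p))  # zero when the two distributions are identical
```

Swapping the arguments changes the result, which is why Q is described as the reference distribution rather than the two being interchangeable.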

Information entropy