What comes to mind when you think of light? Perhaps a vision of yourself basking in the sun on a California beach. Or Michelle Obama’s most recent book, “The Light We Carry”. Or even the metaphorical light we’re not supposed to follow when our time comes. Here, we are going to talk about something even more fundamental: the physics of light.

Light, with its dual nature as both a wave and a stream of particles known as photons, exists in different “forms”, each characterized by its wavelength, which we perceive as color. For instance, visible light, the electromagnetic radiation detectable by the human eye, spans wavelengths from roughly 400 to 700 nanometers.

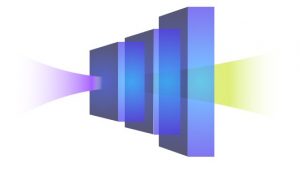

Fascinatingly, we can manipulate light and change its wavelength using a powerful tool: nonlinear optics. Inside nonlinear materials, light behaves in a “nonlinear” manner, meaning that the material’s response is not directly proportional to the strength of the incoming light. In such systems, the properties of light change as it interacts with the material, resulting in nonlinear phenomena. For example, photons interacting with a nonlinear material can be transformed, with a certain probability, into new photons with twice the frequency (and therefore half the wavelength) of the initial photons, in a process called Second Harmonic Generation (SHG). Another phenomenon, even more intriguing, is Spontaneous Parametric Down-Conversion (SPDC), in which one photon of higher energy is converted into a pair of entangled photons of lower energy. What makes these entangled photons unique is their intrinsic interconnection, which persists even when they are separated by vast distances, or even placed at opposite corners of the universe. Measuring the state of one of them instantly tells us about the state of the other, although this correlation cannot be used to send a message faster than light.

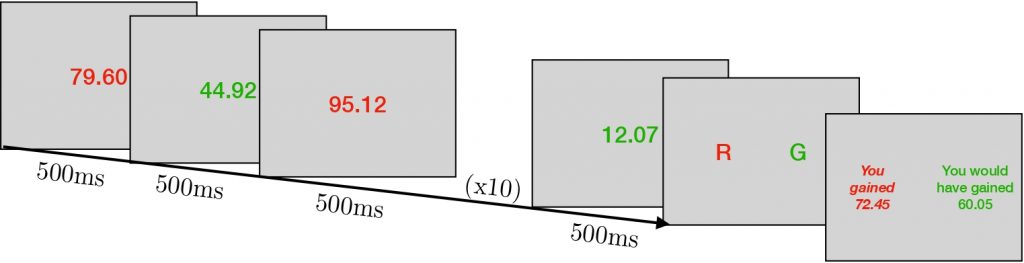
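To make the bookkeeping behind SHG and SPDC concrete, here is a minimal Python sketch based only on energy conservation. The function names and the example wavelengths (800 nm, 405 nm, 810 nm) are illustrative assumptions, not values taken from the work described here.

```python
def shg_wavelength_nm(pump_nm: float) -> float:
    """SHG doubles the frequency, so the wavelength is halved."""
    return pump_nm / 2.0


def spdc_idler_wavelength_nm(pump_nm: float, signal_nm: float) -> float:
    """SPDC conserves energy: 1/pump = 1/signal + 1/idler (wavelengths in nm)."""
    inverse_idler = 1.0 / pump_nm - 1.0 / signal_nm
    if inverse_idler <= 0:
        raise ValueError("the signal photon must carry less energy than the pump photon")
    return 1.0 / inverse_idler


if __name__ == "__main__":
    # Hypothetical example: near-infrared light converted to the violet edge of the visible range.
    print(shg_wavelength_nm(800.0))
    # Hypothetical example: a 405 nm pump photon splitting into two photons of equal energy.
    print(spdc_idler_wavelength_nm(405.0, 810.0))
```

The two photons of an SPDC pair do not have to share the energy equally. Any split that satisfies the relation above conserves energy, and which split actually occurs efficiently depends on the crystal.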

Nowadays, SPDC is especially relevant because it lies at the heart of many applications, for instance quantum cryptography for secure communications and tests of the fundamental laws of quantum mechanics. Inducing these nonlinear behaviors usually requires thick crystals, typically on the order of a few millimeters. This requirement is due to the relatively low nonlinearity of these materials: when the nonlinearity of a material is low, the light must travel through more of it to reach the same conversion efficiency. This limitation has been overcome thanks to the innovative work of Chiara Trovatello, a Columbia postdoc from the Schuck Lab. She and her colleagues have successfully developed a method for generating nonlinear processes, such as SHG and SPDC, in highly nonlinear layered materials with remarkable efficiency, at thicknesses as small as 1 micrometer, a thousandth of a millimeter! To put this in perspective, the materials that Chiara and her colleagues have fabricated are 10-100 times thinner than standard nonlinear materials with similar performance.

While materials with a high conversion efficiency were already well known in the field, achieving macroscopic efficiencies over microscopic thicknesses was still an open challenge. The critical step was to find a way to achieve the so-called phase matching condition in microscopic crystals with superior nonlinearity. Phase matching ensures efficient conversion of light by keeping the interacting light waves in phase as they propagate inside the nonlinear crystal, so that the light generated at each point adds up instead of canceling out. To this end, Chiara and her colleagues designed and fabricated stacks of crystalline sheets with alternating dipole orientation. With this procedure, they were able to change the way the material responds to light, meeting the phase matching condition over microscopic thicknesses. This methodology provides high efficiency in converting light at the nanoscale and unlocks the possibility of embedding novel miniaturized entangled-photon sources on-chip. It paves the way for novel technologies and more compact optical devices for applications in quantum optics. Projecting ourselves into the future, it’s not hard to envision a world where everyone will carry a sophisticated quantum device in their pocket, this time embracing the physical light rather than running away from it.
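For readers who like to see why phase matching matters, the following is a toy Python sketch. It is a textbook-style simplification (plane waves, no pump depletion, no absorption, arbitrary units), not the model used by the researchers. The function name, the mismatch value `delta_k`, and the choice to flip the sign of the nonlinearity once per coherence length are all assumptions made for illustration.

```python
import cmath
import math


def shg_intensity(thickness, delta_k, period=None, steps=2000):
    """Relative second-harmonic intensity (arbitrary units) after `thickness`.

    The crystal is cut into thin slices. Each slice adds a small contribution
    with phase exp(i * delta_k * z), where delta_k is the phase mismatch.
    If `period` is given, the sign of the nonlinearity flips every `period`,
    a simple stand-in for layers stacked with alternating orientation.
    """
    dz = thickness / steps
    field = 0j
    for n in range(steps):
        z = (n + 0.5) * dz  # midpoint of the slice
        sign = 1.0
        if period is not None and int(z // period) % 2 == 1:
            sign = -1.0
        field += sign * cmath.exp(1j * delta_k * z) * dz
    return abs(field) ** 2


if __name__ == "__main__":
    delta_k = math.pi                      # assumed mismatch, arbitrary units
    coherence_length = math.pi / delta_k   # distance after which contributions start to cancel
    thickness = 4 * coherence_length

    print("uniform crystal:   ", shg_intensity(thickness, delta_k))
    print("alternating layers:", shg_intensity(thickness, delta_k, period=coherence_length))
    print("perfect matching:  ", shg_intensity(thickness, 0.0))
```

In this toy model, a uniform mismatched crystal ends up with almost no converted light after an even number of coherence lengths, because later slices undo the work of earlier ones. Flipping the orientation at the right spacing lets every layer contribute constructively, so the output keeps growing with thickness, though more slowly than with perfect phase matching.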

Reviewed by: Trang Nguyen